Product: SAP IPS Information Platform Services (or SAP CMS)

Version: 4.1.x, 4.2.x, 4.3.x

OS: Windows Servers 64-bit

Overview

There is relatively limited information on Java web applications using MS SQL Server's Windows authentication in the internet. Microsoft's JDBC documentation is relatively incomplete and leaves gaps in its installation, and leads to many wrong information around.

SAP IPS, or also called as SAP CMS, is a web application that is running on Apache Tomcat. It uses SAP Java 8 for many years covering SAP IPS v4.1.x until now (v4.3.x) in 2025.

This post mainly to use SAP IPS as an example of web application server to discuss about the proper installation when needs to use MS SQL Server's Windows authentication

Background

Main MS SQL Server JDBC Driver Homepage: https://learn.microsoft.com/en-us/sql/connect/jdbc/download-microsoft-jdbc-driver-for-sql-server?view=sql-server-ver16

As of today, MS SQL Server JDBC version 12.8 is the current version. It supports Java 8, 11, 17, 21, and 22. Moreover, it bundled with 32-bit and 64-bit DLL to be used when the application supports MS SQL Server's Windows authentication. This post mainly focus in this DLL, as improper installation will leads to the Java application, and web application failed to use Windows authentication.

Support of 32-bit Java adds another layer of complexity, where a lot of people is not aware of it, and do not know 64-bit Windows in term of 32-bit Java application support.

Both Microsoft JDBC driver page, as well as Java does not clarify the Windows DLL search order, which often leads to many Internet forums to contain mix messages. You need to be a DLL developer in order to know Microsoft has long documented DLL search order, which now is located in following web page:

- Title: Dynamic-link library search order

- URL: https://learn.microsoft.com/en-ca/windows/win32/dlls/dynamic-link-library-search-order

This page's content is not "formatted" for Java.exe, so anyone who is poor in Windows OS, or never done any Windows programming will easily lost, and most probably think this page is not applicable.

Preparation

1. Identify your java.exe is 32-bit or 64-bit. This could be a complicated process, if you install your own Java and forgotten about whether you download 32-bit or 64-bit Java. For SAP IPS, it bundled with its own 64-bit Java, so this can be skipped

2. If you java.exe is 32-bit, seriously consider to switch to use 64-bit java.exe so that you can leverage more than 4GB RAM (virtual or physical). Considering Windows Server 2016 only offers in 64-bit, and no 32-bit, so it is not sustainable to use 32-bit java.exe. For SAP IPS, this can be skipped, as it is using 64-bit java

3. Determine whether the application uses JDBC 2.0 distributed transactions, which its JDBC class will be SQLServerXADataSource. It is also called as extended architecture, or XA for short. Most Java program will not uses this. SAP IPS does not use this as well

4. Download MS SQL Server JDBC Driver in either zip or tar.gz format

3. Unzip it into a working directory instead of C:\Program Files\Microsoft JDBC DRIVER 12.8 for SQL Server indicated in the tar.gz/zip > install.txt. SAP IPS only needs 1 DLL responsible for Windows authentication, but not the rest of the files

3.1. This JDBC zip file contains both 32-bit and 64-bit DLL to used for Windows authentication called below

3.1.1. enu\auth\x64\mssql-jdbc_auth-12.8.1.x64.dll - used for 64-bit Java

3.1.2. enu\auth\x86\mssql-jdbc_auth-12.8.1.x86.dll - used for 32-bit Java

3.1.3. This Windows DLL version does not need to match mssql-jdbc-<JDBC version>.jre<jre ver>.jar

3.2. The zip file contains DLL for Java XA extended architecture in both 32-bit and 64-bit. SAP IPS does not use it, so do not need to install these DLL. I have not see any Java applications that uses MS SQL Server JDBC XA, so not much to say, except following mapping. I will recommend to rename the DLL filename to include x64 or x86, as default name can't easily tell the different:

3.2.1. enu\xa\x64\sqljdbc_xa.dll - used for 64-bit Java

3.2.2. enu\xa\x86\sqljdbc_xa.dll - used for 32-bit Java

3.3. Determine your Java version. Picks the corresponding JAR file based on your Java version. SAP IPS web application bundled with its own JAR file, so it does not use any of these For other readers who uses Java 8 - 21, see following table mapping based on latest JDBC 12.8.1:

3.3.1. Java 8 - 10: uses mssql-jdbc-12.8.1.jre8.jar

3.3.2. Java 11 and higher: uses mssql-jdbc-12.8.1.jre11.jar

4. Determine how many java.exe is installed and used in the client machine. SAP IPS often only has its own Java, and not using external Java that installed by administrator/developer/user

5. Does the Java application's JAR file bundled with its own JDBC JAR file. If yes, then just need to focus in mssql-jdbc_auth-12.8.1.x64.dll (64-bit) and mssql-jdbc_auth-12.8.1.x86.dll (32-bit) to match the corresponding java.exe bitness

Planning and Installation

1. In the computer that you are setting up, determine how many different version of MS SQL Server daemon/instance your applications, as this related to the JDBC driver, and mssql-jdbc_auth-<version>.<bit>.dll. For SAP IPS, and SAP Data Services, it is often to work with 1-2 version of MS SQL Server. This indirectly implies 1 JDBC driver is required in SAP IPS machine, which often support few MS SQL Server version. SAP IPS does not install this DLL, and administrator needs to manually copy mssql-jdbc_auth-12.8.1.x64.dll (64-bit only). Recommended directory is C:\Windows\system32\ (64-bit only)

2. If the Java applications installed in the same machine required to install multiple different Java JDBC - MS SQL Server JAR file, and different mssql-jdbc_auth.dll, then take note of this

2.1. This implies following files in the same MS SQL Server client machine:

2.1.1. mssql-jdbc-12.8.1.jre8.jar (or jre11) must not resides in jdk\lib\ext\ directory, unless there are multiple Java directory

2.1.2. mssql-jdbc_auth.dll (32-bit and 64-bit) must not resides in C:\Windows\system32\ or C:\Windows\SysWOW64\

3. If multiple Java.exe is used, then decide whether each will has its own JDBC JAR version, and mssql-jdbc_auth-<version>.x64.dll version. I will ignore anyone will use 32-bit java.exe

3.1. If only 1 JDBC client version is required, then copy the JAR file into each of the jdk\lib\ext\, or jre\lib\ext\ directory. This is not required for SAP IPS, or any web application which bundled the JDBC jar file in its WEB-INF\lib\ directory

3.2. If only 1 version of Window authentication DLL is required, then copy the DLL file into C:\Windows

3.2.1. 64-bit: Copy into C:\Windows\system32\mssql-jdbc_auth-12.8.1.x64.dll

3.2.2. 32-bit: Copy into C:\Windows\SysWOW64\mssql-jdbc_auth-12.8.1.x86.dll

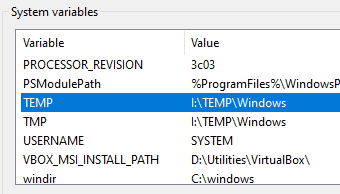

4. If you want to keep a separate directory to keep mssql-jdbc_auth-<version>.<bitness>.dll, then edit Windows system environment variable, and set PATH to include this custom directory. I will recommend this as well, but this is a global setup, and will affects all java to use the same DLL in this machine

Troubleshooting

Troubleshooting any applications that use MS SQL Server Windows authentication will require to troubleshoot using the specific Window username. This is often a ActiveDirectory domain user account. ActiveDirectory's user group policy can further configured to prevent the user from login interactively (using Windows login screen).

Due to user group policy could disable interactive login to the Windows machine, troubleshooting will require to ask ActiveDirectory admin to temporary enable/allow interactive login. This page assumes the ActiveDirectory user is able to login to Windows, or uses RunAs command to run CMD to perform troubleshooting.

Starts the troubleshooting using following 2 methods to login as the Windows user who is authorized to login to MS SQL Server (Windows authentication):

1.. In Windows Login screen, login as the Windows user who used to start the Java. In this example, SAP IPS/CMS

2. Login to Windows as your personal username, or other username that allows to login to the SAP IPS machine. In Start menu, type CMD > right-click > Run As Other User > enter the Windows user who used to start Java. You will get Command Prompt, with the username shown as the one you just entered

Once you reached this, the rest of the steps are relatively easy for Java application. I will use the wording "authorized Windows user" to indicate the Windows username authorized to login to MS SQL Server in the rest of the procedure.

1. Download full MS SQL Server JDBC file as zip (Windows) from official homepage of https://learn.microsoft.com/en-us/sql/connect/jdbc/download-microsoft-jdbc-driver-for-sql-server?view=sql-server-ver16

2. As of today, JDBC version 12.8.1 is compatible with Java 8 - 21, and MS SQL Server version 2016 - 2022

3. If the Java program needs to connects to MS SQL Server 2014, then download JDBC version 12.6 or older (minimum 3.0)

4. Extract sample Java login program from the zip file

4.1. Directory name: sqljdbc_12.8 > enu > samples > connections > ConnectURL.java

5. Modify ConnectURL.java with the MS SQL Server login, e.g. hostname, port, DB name

5.1. Modify line 30: String connectionUrl = "jdbc:sqlserver://<server>:<port>;databaseName=AdventureWorks;user=<user>;password=<password>";

5.1.1. Fill in <server> as the MS SQL Server hostname

5.1.2. Fill in <port> as the MS SQL Server port number

5.1.3. Replace AdventureWorks as the MS SQL Server logical database nane. If there is no DB, then master DB will always be there

5.1.4. Removes ";user=<user>;password=<password>"

5.1.5. Append ";integratedSecurity=true" after the databaseName parameter, e.g. "jabc:sqlserver://......databaseName=master;integratedSecurity=true;trustServerCertificate=true"

5.1.6. The parameter "trustServerCertificate=true" is optional, but many DBA has configured to force SSL, as well as used self-sign SSL certificate. More description can be found in Learn.Microsoft.com: https://learn.microsoft.com/en-us/sql/connect/jdbc/connecting-with-ssl-encryption?view=sql-server-ver16

6. Compile the Java program. Let's keep the filename as ConnectURL.java. For SAP IPS, please uses Java 8. e.g. AdoptOpenJDK, Oracle Java, Amazon Java 8

6.1.<JAVA JRE 8 PATH>\bin\javac ConnectURL.java. You can use either 64-bit or 32-bit as this is a small program that won't use up 4GB RAM

6.2. You will see new file ConnectURL.class in the current directory

7. Run the ConnectURL.class program with debug mode. This step will provides more helpful information in troubleshooting the login issue

7.1. Copy the MS SQL Server JDBC JAR file into the same directory as ConnectURL.class

7.2. Copy mssql-jdbc_auth-12.6.2.x64.dll into the same directory as ConnectURL.class. SAP BODS 4.2SP3PL2 bundled with JDBC MS SQL Server v12.6.2, so I will use this version. You will download and test based on the version you use

7.3. Runs Java.exe v8 64-bit to run the program. Uses following syntax:

7.3.1. Command: java -Djavax.net.debug=ssl --classpath mssql-jdbc-12.6.2.jre8.jar:. ConnectURL

7.4. If you failed, you can test with different scenarios like below:

7.4.1. java.exe 32-bit or 64-bit

7.4.2. java.exe on different version, e.g. 8, 9, 10, 11, all the way to 21

7.4.3. different JDBC JAR file for mssql-jdbc-<JDBC version>.jre8.jar

7.4.4. different javax.net.debug, such as only shows SSL handshake - "java -Djavax.net.debug=ssl:handshake --classpath mssql-jdbc-12.6.2.jre8.jar:. ConnectURL"

7.4.5. has multiple copy of ConnectURL.class which compiled with different Java.exe version

7.5. Tries different combination so that you fully understand the issue is in

7.5.1. java.exe - 64-bit, distribution of the java.exe, e.g. SAP Java, IBM Java, Oracle Java, AdoptOpenJDK Java, Amazon AWS Java, RedHat Java

7.5.2. mssql-jdbc-<version>.jre8.jar - could be different JDBC version, jre8.jar or jre11.jar

7.5.3. mssql-jdbc_auth-<version>.x64.dll - Microsoft never say whether the DLL version must match JDBC JAR, so you can try different version that bundled with 3rd party software, e.g. SAP BODS 4.3SP3PL3 bundled with mssql-jdbc_auth-12.6.0.x64.dll with mssql-jdbc-12.6.2.jre8.jar (different version)

7.5.4. OS, machine - easy to copy this to any machine, laptop, server, gateway so that you can repeat this test easily

7.5.5. Copy mssql-jdbc_auth-<version>.x64.dll into a dedicated directory, e.g. D:\ReportServer\JDBC\MSSQLServer12.6.2\, and modify PATH to include this directory (must change PATH to points to the directory where this DLL located)

7.5.6. For above PATH, you can replace it by specifying parameter "-Djava.library.path=D:\ReportServer\JDBC\MSSQLServer12.6.2"

8. mssql-jdbc_auth-<version>.x64.dll - Some recommendations to perform more troubleshooting in this section. Removes mssql-jdbc_auth-<version>.x64.dll from current directory, and place it in different directories based on the application that you work on

8.1. Example #1, SAP IPS/BODS placed it in C:\Windows\system32\ (for 64-bit DLL) and C:\Windows\SysWOW64\ (for 32-bit DLL)

8.2. Example #2, <java JRE>\lib\ext\ directory, which is the recommended directory to store external JAR. Some developers or 3rd party vendors recommend to put into the application's JAR file, while I recommend to put into dedicated Java directory in its lib\ext for ease of security patching, and upgrade by isolating the Java application from popupar vendor, like MS SQL Server

8.3. Example #3, put it in your own Java JAR's WEB-INF\lib\ directory

8.4. Do not place 32-bit of DLL, e.g. mssql-jdbc_auth-12.8.1.x86.dll into C:\Windows\SysWOW64\. Window's directory architecture design for this directory is for 32-bit application in 64-bit Windows (in other word, in 32-bit Windows 10, SysWOW64 directory doesn't exists, and you need to put the 32-bit DLL into C:\Windows\system32\ directory). Java 64-bit application will work with this DLL in SysWOW64, as it is ignoring DLL in 32-bit SysWOW64 directory, even it exits

8.5. Do not place 64-bit of DLL, .g. mssql-jdbc_auth-12.8.1.x64.dll into C:\Windows\system32\ for Windows 64-bit. This will leads to Java 64-bit failed to work in its Windows authentication, and you will required an OS

9. By now, you will know the relationship of mssql-jdbc_auth-<version>.x64.dll, 32-bit Java, 64-bit Java, JDBC JAR version, SSL handshake, C:\Windows\system32\ for DLL (if using 64-bit Java.exe), and C:\Windows\SysWOW64\mssql-jdbc_auth-<version>.x86.dll (if using 32-bit Java.exe)